Tom Frieden’s 14 Studies Do Not Prove That Vaccines Do Not Cause Autism

November 23 | Posted by mrossol | Critical Thinking, Health, Math/Statistics, Science, Vaccine

I continue to believe that there is a causal relationship between many/most childhood vaccines (USA) and autism. One thing that seems absent: if no causal relationship exists, then what WAS the cause of all [and each and every] cases of autism? mrossol

Source: Tom Frieden’s 14 Studies Do Not Prove That Vaccines Do Not Cause Autism

by James Lyons-Weiler, PhD | Popular Rationalism

Former CDC Director Tom Frieden made an unusual post on X.

His post included these two images:

We’ve seen these studies before. But now Frieden and others have cited a small subset of studies (a tightly curated list of 14 papers) as definitive proof that vaccines do not cause autism.

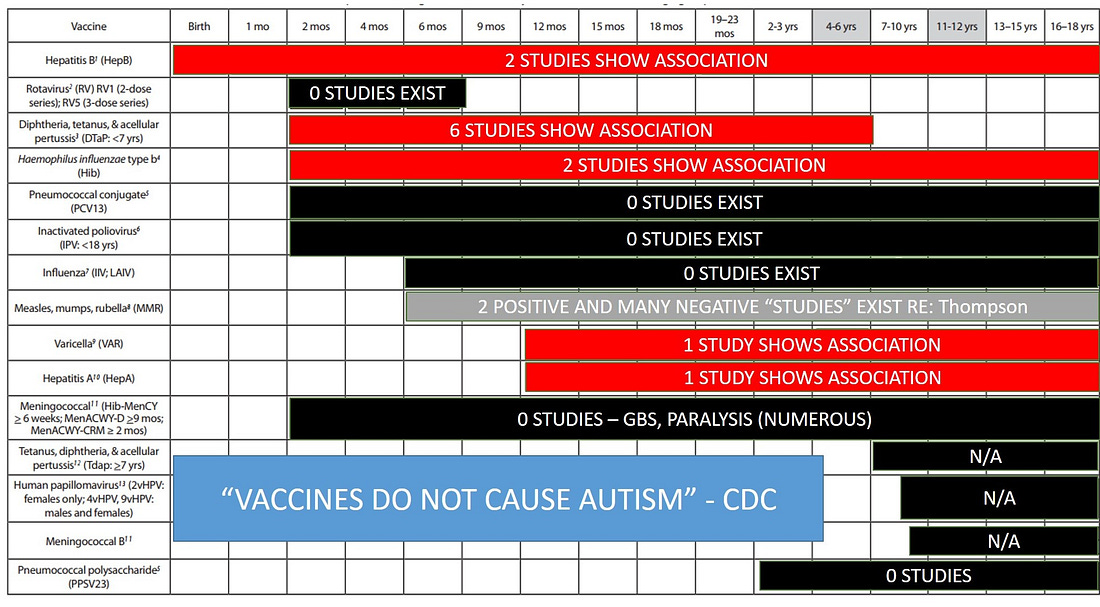

First problem: They left out a bunch of studies, failed to report that not all vaccines have been tested for association with autism, and failed to report that association analyses are not critical tests of causality in the first place.

(My “Magic” slide from Life University, GA, 2015, where the audience first learned that not all vaccines had been tested and that some positive results DID exist linking vaccines and autism.)

Beneath Frieden’s canned but polished talking points and “official” (e.g., AAP) institutional endorsements of the list of 14 lies a brittle and contradictory foundation. A closer examination reveals that not one of these 14 studies was equipped to falsify (i.e., test to the point of rejecting it if it were false) the causal hypothesis. Each used the wrong design, the wrong comparison groups, or the wrong statistical assumptions. What follows is a forensic critique of all 14 studies as listed in the two pro-vaccine infographics Frieden shared.

1. Hviid et al. (2019)

Claimed to show that MMR vaccination is not associated with autism, even in high-risk children. But the Danish registry study was a retrospective cohort design, not a randomized trial. Its sample was riddled with healthy-user bias: parents who saw adverse effects early were more likely to stop vaccinating. The study did not include a true unvaccinated group and did not examine cumulative vaccine exposures. Subgroup effects, such as genetic vulnerability or cofactor interactions, were not modeled at all. Odds ratios were averaged over a population already filtered by survivorship. Moreover, the study made no effort to validate diagnoses or vaccination records and failed to account for autism incidence delays relative to exposure windows. Collider bias.

2. Madsen et al. (2002)

One of the most widely cited studies in the vaccine-autism debate, and one of the most flawed. The authors removed outcome data from the year 2001 because it contradicted their conclusion (Hooker found this). They also manipulated how outpatient and inpatient cases were counted, creating an artificial rise in autism prevalence at a convenient moment. The average age of autism diagnosis was five, yet children as young as two were counted as “no autism.” The study suffered from cohort misclassification and lacked transparency in the data linkage. No true control group. No examination of dose-responsiveness. No mention of aluminum or thimerosal. Co-author Poul Thorsen was later indicted for embezzling CDC autism research funds and is currently being held by German authorities for extradition to the United States.

3. Taylor et al. (2014)

A meta-analysis often held up as the crown jewel of null findings. But it simply aggregated the same flawed studies as other reviews (including Madsen, Fombonne, and Price) and did not perform any new analysis of raw data. The authors did not account for the methodological flaws in the included studies. Crucially, they included no studies that found positive associations between vaccines and autism (see Magic slide above). The review was not neutral; it was confirmatory. Absence of evidence was misrepresented as evidence of absence. No evaluation of statistical power, covariate handling, or heterogeneity. Notably, Taylor included studies previously rejected by the Institute of Medicine.

4. Parker et al. (2004)

A narrative review of thimerosal-containing vaccines that drew heavily on studies later found to be underpowered or biased. The review presents the removal of thimerosal as a clean natural experiment, but never acknowledges that thimerosal remained in flu vaccines, and that aluminum-adjuvanted vaccines increased during the same period. The authors treat ecological trend data as if it could test causality, and fail to address the growing animal literature on thimerosal-induced neurotoxicity. The paper also misrepresents Verstraeten’s CDC work as definitive, despite internal correspondence from Verstraeten himself noting that his 2003 study was not evidence against an association, only inconclusive. Failed to report that not all vaccines were tested for association with autism.

5. Smeeth et al. (2004)

A UK case-control study with statistical sleight of hand. Adjusted for the age of enrollment in the medical database as a proxy for vaccination timing, which confounded rather than corrected. Raw data, when reanalyzed, shows a statistically significant association between MMR and autism. But by applying multiple covariate corrections and matching cases and controls in a way that diluted exposure differences, the authors rendered the association non-significant. The contingency table used in this study yields a chi-square value of 11.34, p = 0.00076—a significant result if not over-corrected.

6. Gidengil et al. (2021)

A systematic review funded by AHRQ that grouped together dozens of low-quality retrospective studies and used GRADE criteria to declare the evidence “high quality.” But GRADE was designed for clinical interventions, not for observational correlation studies that are structurally incapable of testing causality. The review includes no studies of vaccinated vs. never-vaccinated children, nor any studies that investigate aluminum, schedule density, or prenatal exposures. It also fails to note that several included studies (e.g., DeStefano 2004, Price 2010) used limited datasets drawn from VSD with known statistical manipulation concerns. Failed to report that not all vaccines were tested for association with autism.

7. Maglione et al. (2014)

This AHRQ review is nearly identical in structure and conclusions to Gidengil, and suffers the same core flaw: it conflates consistency of null results with validity. Many included studies used overlapping datasets or shared authorship, which undermines independence. None were randomized. None stratified for subgroups. The authors report “no association” while acknowledging the absence of research in high-risk children, cumulative exposures, or gene-environment interactions. Publication bias is not examined despite stark differences between included and excluded study odds ratios. Failed to report that not all vaccines were tested for association with autism.

8. Dudley et al. (2020)

A meta-review that summarizes other systematic reviews, adding another layer of abstraction away from primary data. It includes the same flawed MMR and thimerosal studies, then declares the case closed. But the consistency it observes is a consistency of error: shared methodological blind spots, such as over-adjustment for covariates, failure to model interactions, and exclusion of vulnerable populations. No exploration of confounders-as-cofactors, nor any attempt to simulate or test potential causal pathways. Instead, the conclusion is policy-aligned rather than hypothesis-driven.

9. Halsey & Hyman (2001)

An early position paper emerging from an AAP conference, often cited as expert consensus. It is not a study. It presents no new data. It reviews a small number of trend studies and declares that the hypothesis of vaccine-induced autism is unsupported. But those trend studies (e.g., Dales et al., Stehr-Green) have been shown to rely on misleading graphs, insufficient time windows, and improperly defined exposure groups. The paper also neglects the biological plausibility emerging from animal studies and ignores early signals from adverse event reporting systems. Failed to report that not all vaccines were tested for association with autism.

10. Fombonne et al. (2001)

Claims to disprove a link between MMR and autism using Canadian data. But the thimerosal content of vaccines during the study period was not eliminated, only reduced. The study conflates changes in diagnosis and service availability with incidence. Autism prevalence rose, but was incorrectly attributed to “better awareness.” No raw vaccination records were confirmed. MMR coverage was assumed based on policy, not verified per subject. Cohort definitions used allowed for substantial overlap between vaccine formulations.

11. Peltola et al. (1998)

Cited as evidence that MMR is safe because no autism was diagnosed among 3 million children. But the authors never actually measured autism. They looked at adverse events within a 3-week window after vaccination and found no spike in hospital admissions. Autism, which typically emerges over months or years, would not appear in that window. Using this study to rule out causation is categorically invalid. It is functionally irrelevant to the autism hypothesis.

12. Honda et al. (2005)

A Japanese study claiming that autism increased even after MMR was withdrawn. What it fails to mention is that monovalent measles, mumps, and rubella vaccines were still used, just not in the same syringe. Total vaccine exposure did not decline. Meanwhile, diagnostic capture increased and other vaccines were added. The study design is ecological and lacks any individual-level data. Additionally, Japanese vaccine policy and reporting practices changed simultaneously, further confounding the inference. A table in the paper was changed post-publication without editorial comment or annotation.

13. D’Souza et al. (2006)

Tested whether children with autism had persistent measles virus in their gut. Found none, and concluded MMR was safe. But this tests a single mechanistic hypothesis: one involving persistent infection. It does not and cannot rule out immunological or toxicological mechanisms. It also fails to distinguish between onset of gastrointestinal symptoms and the emergence of autistic behaviors. No other markers of immune dysregulation, inflammation, or adjuvant bioactivity were studied.

14. Hornig et al. (2008)

Similar to D’Souza. Looked for viral RNA in tissue samples from children with autism. Found no measles. But again, this does not address aluminum adjuvants, microglial priming, synergistic toxicity, or autoimmune activation. The absence of viral fragments is not the same as the absence of harm. This mechanistic null finding has been repeatedly misrepresented as general evidence of vaccine safety. Furthermore, the limited sample size and constrained analytic window undercut any generalizability.

Conclusion

Frieden’s list of 14 studies is not a triumph of evidence. It’s a lesson in circular citation, overreach, and misinterpretation. The public deserves more than slogan science. Autism is not a singular entity, and vaccines are not a single exposure. Until we study total-schedule effects, susceptible subgroups, gene-environment interactions, cumulative toxicant load, and the prediction accuracy of models that remove children from the schedule (or from specific vaccines) based on risk stratification, using a mix of retrospective and prospective studies that includes properly powered, prospective, randomized designs, we cannot claim to have tested, let alone rejected, the hypothesis that vaccines contribute to autism risk in some children.
