How Sure Should We Be About Healthcare Interventions?

October 31 | Posted by mrossol | Critical Thinking, Health

Source: How Sure Should We Be About Healthcare Interventions?

A sobering paper about the success of healthcare interventions flew under the radar this spring.

Professor Jeremy Howick from the University of Oxford had the idea to estimate the fraction of healthcare interventions that are shown to be effective by high-quality evidence.

(Before you read on, pause and make a guess in your head.)

He and a group of colleagues looked at a random sample of nearly 2,500 Cochrane Reviews published in the last 15 years. Most experts consider Cochrane Reviews the gold standard of medical evidence.

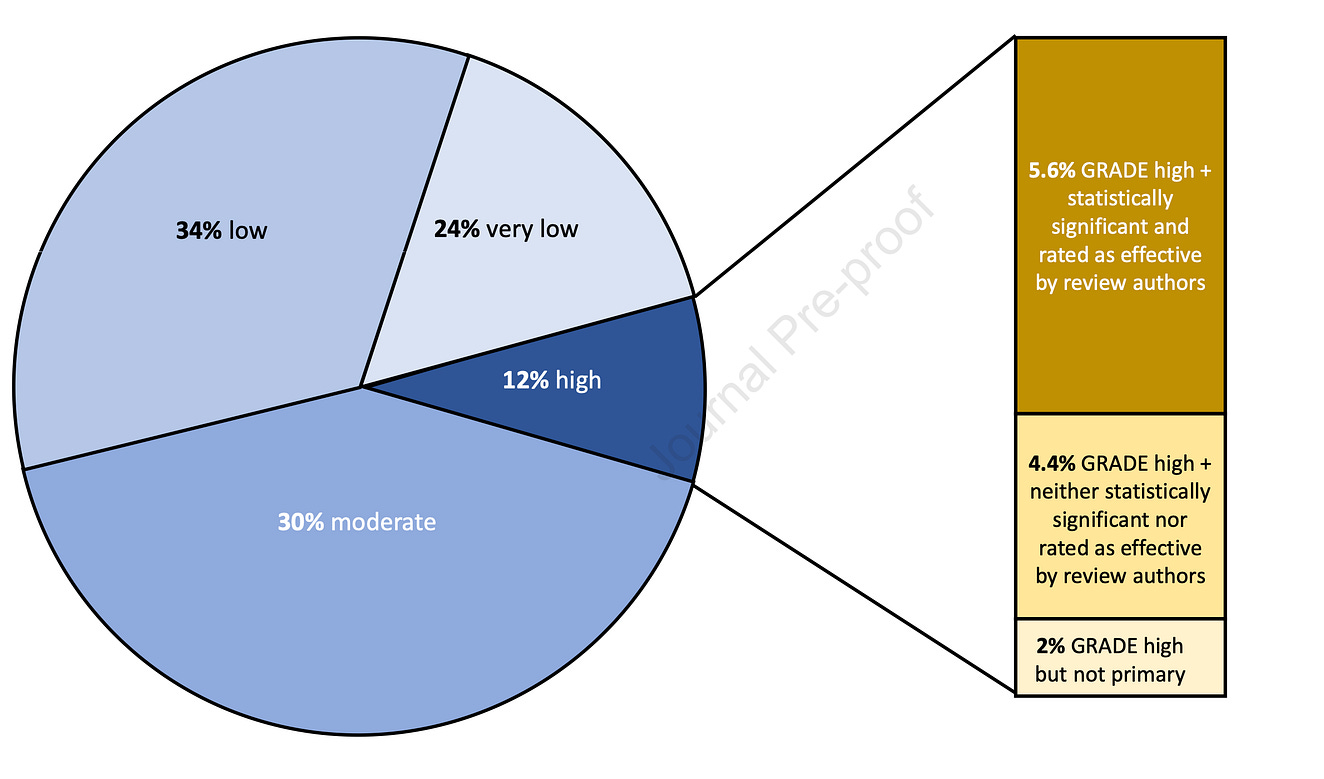

Howick and colleagues studied interventions that were compared with placebo or no treatment and whose evidence quality was rated using something called the GRADE standard. GRADE specifies four levels of certainty for a body of evidence for a given outcome: high, moderate, low and very low.

Then they calculated the fraction of interventions that met three criteria: a) the evidence of efficacy was high quality; b) the effects were positive and statistically significant; and c) the Cochrane reviewers judged the intervention beneficial. They also looked at the fraction of interventions in which harms were studied.

Sit down for the results:

- Only 6% of eligible interventions (n = 1567) met all three criteria: high-quality evidence, statistically significant positive effects, and a judgment by the reviewers that the intervention was beneficial.

- Harms were only measured in about a third of interventions, of which 8% had statistically significant evidence of harm.

- Among the interventions that did not have any outcomes with high-quality evidence, the highest GRADE rating was moderate in 30%, low in 34%, and very low in 24%.

Comments:

When we go to the doctor and undergo a test or procedure, or take a new medication, most of us expect that there’s darn good support for it.

This paper suggests that it’s not true.

When I started practice, this data would have surprised me. But as I’ve gathered experience in both practice and in the review of medical evidence, I am no longer surprised.

Everyone is far too confident.

Doctors prescribe a drug, and the patient improves. We ascribe the improvement to the drug. But we don’t know if the patient improved because of the prescription or despite it. When it’s an antibiotic, drawing such a conclusion is fair, but most drugs are unlike antibiotics.

Procedures are even more likely to fool our cause-seeking brains. A doctor does a procedure that induces a change in something that can be seen—a stent makes an angiogram look smoother; an ablation vanquishes a cardiac signal.

The visual feedback makes us feel successful; we get a rush of dopamine. If the patient improves, we make a causal connection between the visual feedback and the success. But we don’t know if the improvement is because of the procedure or despite it.

That’s where evidence comes in. If an intervention truly causes improvement, evidence bears it out. Many therapies we thought were successful ended up as mirages in our brains.

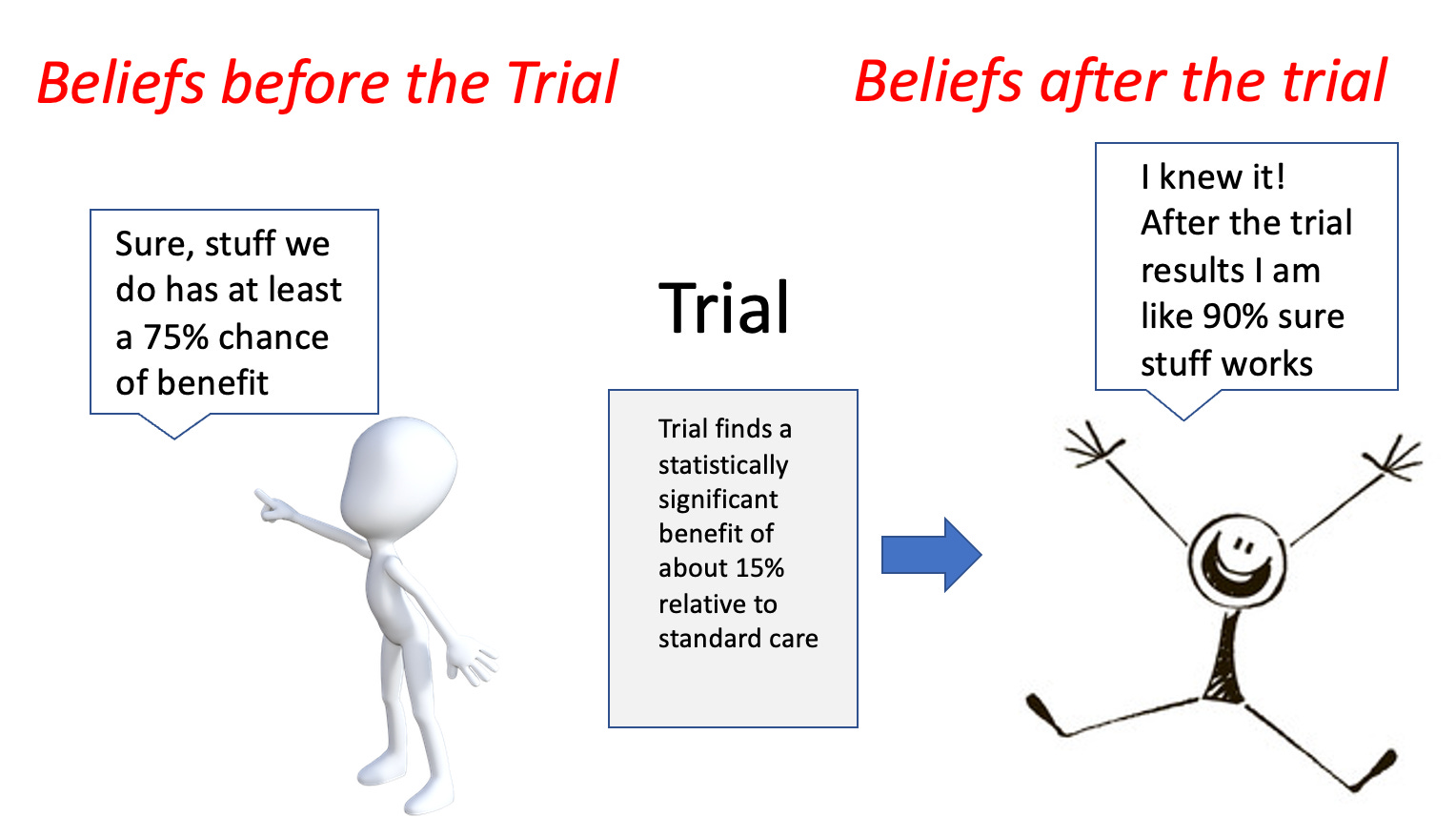

Here are some pictures to show why our prior beliefs matter. Think of trials as medical tests: they almost never give a definitive answer. Rather, trials modify our prior beliefs.

A typical “positive” cardiology trial often finds a 10-20% improvement in a new therapy.

The first picture below shows our beliefs before and after such a trial if we start with a gullible prior: a more than 75% chance that things work.

See: if you start with overly optimistic priors, you end up cocksure that healthcare interventions almost always work.

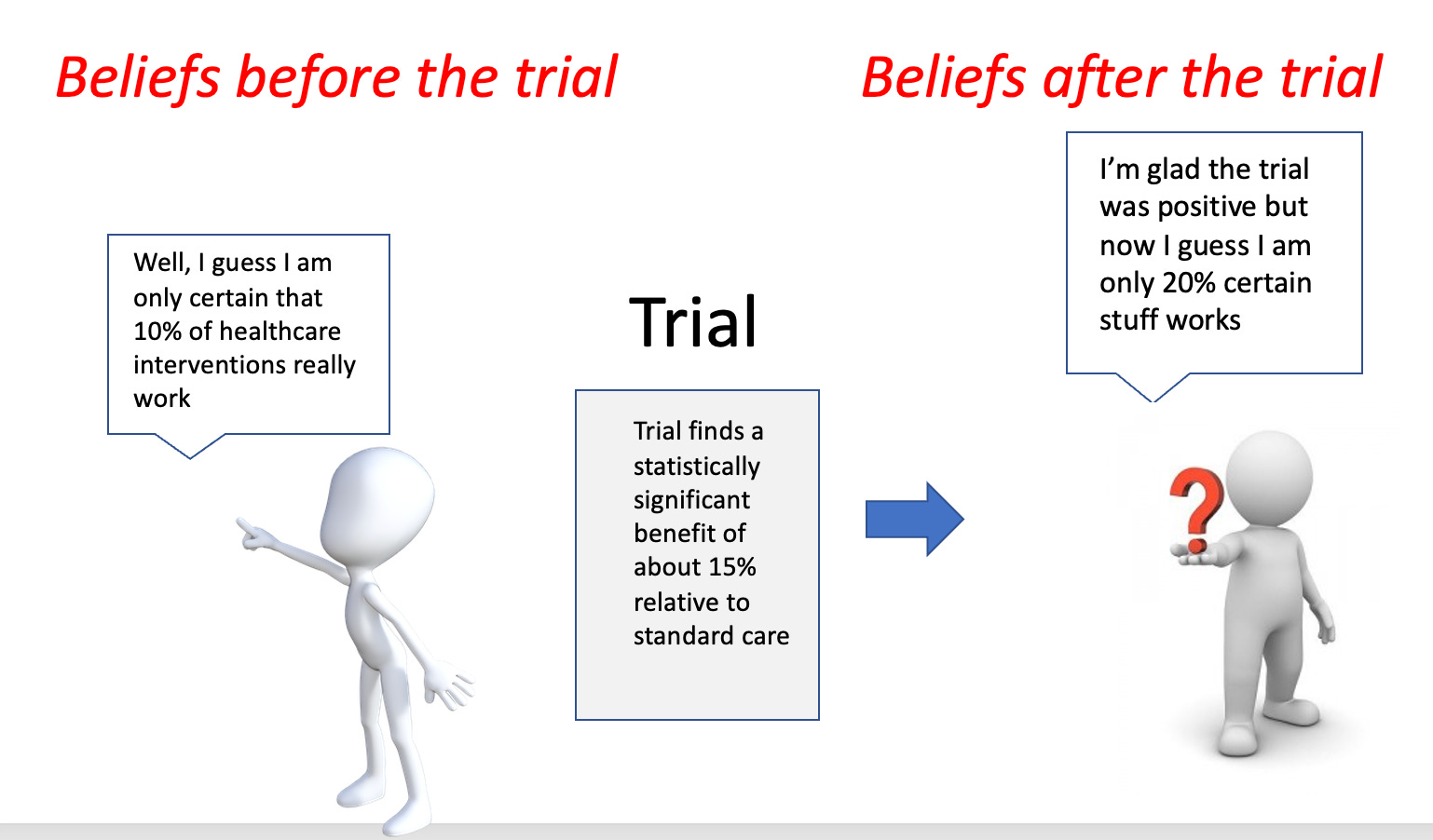

Now look at what happens if you take your prior from the Howick et al. paper, where less than 10% of interventions have high-quality, statistically robust findings of benefit.

The *same* trial has a much different effect.
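For readers who want to see the arithmetic, here is a minimal sketch of that update. It is not the calculation behind the pictures. It treats a trial like a diagnostic test and applies Bayes' rule to a simple yes/no question: does the therapy work? The two priors (75% and 10%) are round stand-ins for the "gullible" and Howick-style starting points described above. The trial characteristics (80% power, a 5% false-positive rate) are illustrative assumptions, not figures from the paper.

```python
def posterior_given_positive_trial(prior, power=0.8, false_positive_rate=0.05):
    """Probability a therapy truly works after one 'positive' trial (Bayes' rule).

    power and false_positive_rate are assumed, illustrative values,
    not numbers taken from the Howick et al. paper.
    """
    true_positive = power * prior                         # works, and trial is positive
    false_positive = false_positive_rate * (1.0 - prior)  # doesn't work, trial positive anyway
    return true_positive / (true_positive + false_positive)


# Hypothetical priors standing in for the two starting points in the text.
priors = {
    "optimistic prior": 0.75,
    "Howick-style prior": 0.10,
}

for label, prior in priors.items():
    posterior = posterior_given_positive_trial(prior)
    print(f"{label}: {prior:.0%} before the trial -> {posterior:.0%} after")
```

Under these assumptions, the optimist ends up at about 98% belief that the therapy works, while the skeptic lands near 64%: more convinced than before, but still wanting confirmation.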

Conclusion:

I love being a doctor. I believe we help people. But one of our greatest foes is overconfidence.

Healthcare is hard. The human body is complex. Most stuff doesn’t work.

Patients and doctors alike would do better to be far more skeptical. Not cynical, just skeptical.

This would lead us to want stronger evidence for our interventions—especially when there is harm or high cost involved.

Then we would be less likely to adopt therapies that don’t work, or worse, harm people we are supposed to help.

